Researchers at the University of Sassari in Italy and the University of Plymouth in the UK have developed a cognitive program able to learn language from scratch through communication with a human interlocutor. The model, called ANNABELL, goes beyond the traditional ways computers “speak” because it does not rely on pre-coded language.

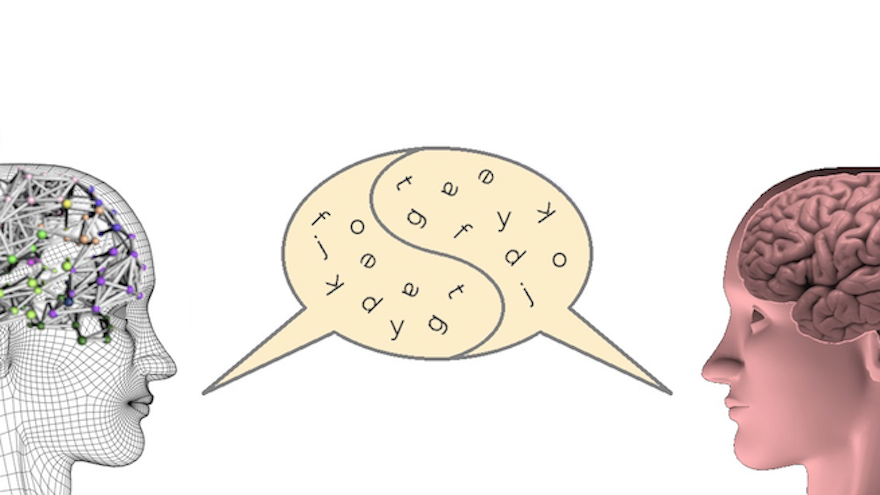

In an effort to uncover the secrets of human language development, researchers developed ANNABELL, the Artificial Neural Network with Adaptive Behavior Exploited for Language Learning. The model is made up of two million interconnected artificial neurons and is able to communicate with a human thanks to two fundamental mechanisms: synaptic plasticity and neural gating.

Both mechanisms are also present in the biological brain. Synaptic plasticity is essential for learning and for long-term memory, while neural gating mechanisms, carried out by bistable neurons, either transmit or block signals from one part of the brain to another. Together, these mechanisms allow ANNABELL to control the flow of information.
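To make the two ideas concrete, here is a toy sketch in Python. It is not ANNABELL's actual code: the function names, the learning rate, and the simple Hebbian rule used to stand in for synaptic plasticity are all assumptions chosen for illustration. The first function strengthens connections between units that are active together, and the second lets a signal through only when a gate is open.

```python
# Toy illustration only; names and the Hebbian rule are assumptions,
# not taken from the ANNABELL study.

def hebbian_update(weights, pre, post, rate=0.1):
    """Plasticity: strengthen each connection in proportion to the
    joint activity of its input (pre) and output (post) units."""
    return [
        [w + rate * post[i] * pre[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


def gated_forward(weights, pre, gate_open):
    """Gating: transmit the weighted signal when the gate is open,
    block it (all zeros) when the gate is closed."""
    if not gate_open:
        return [0.0] * len(weights)
    return [sum(w * x for w, x in zip(row, pre)) for row in weights]


# Two input units, two output units, no connections learned yet.
weights = [[0.0, 0.0], [0.0, 0.0]]
pre, post = [1.0, 0.0], [0.0, 1.0]

weights = hebbian_update(weights, pre, post)
open_signal = gated_forward(weights, pre, gate_open=True)
blocked_signal = gated_forward(weights, pre, gate_open=False)
```

After one update, only the connection between the active input and the active output has grown, and the same input produces a signal downstream only while the gate is open.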

Like the human brain, ANNABELL is able to learn from the environment rather than from pre-coded information installed by humans. According to the study, the cognitive model was validated using a database of about 1500 input sentences, based on literature on early language development. It responded by producing a total of about 500 sentences in output, containing nouns, verbs, adjectives, pronouns, and other word classes. This demonstrates a wide range of capabilities in human language processing.